Why Google Cloud Compute?

Most companies use data centers because they offer cost predictability, hardware certainty, and control. However, running and maintaining resources in a data center also requires a lot of overhead, including:

Capacity: enough resources to scale as needed, and efficient use of those resources.

Security: physical security to protect assets, as well as network- and OS-level security.

Network infrastructure: components such as wiring, switches, routers, firewalls, and load balancers.

Support: skilled employees to perform installation and maintenance and to address issues.

Bandwidth: suitable bandwidth for peak load.

Facilities: physical infrastructure, including equipment and power.

Fully featured cloud platforms such as Google Cloud Platform help remove much of the overhead surrounding these physical, logistical, and human-resource-related concerns, and can help reduce many of the related business costs in the process. Because Cloud Platform is built on Google's infrastructure, it also offers additional benefits that would typically be cost-prohibitive in a traditional data center, including:

A global network

Built-in, multi-regional redundancy

Multiple data-center regions and zones across the globe help ensure strong redundancy and availability. This is especially important when you need to expand or develop internationally across multiple geographic zones.

Fast, dependable scaling

Cloud Platform is designed to scale like Google’s own products, even when you experience a huge traffic spike. Managed services such as Google App Engine, Google Compute Engine's autoscaler, and Google Cloud Datastore give you automatic scaling that helps your application to grow and shrink its capacity as needed.
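
To make the idea of growing and shrinking capacity concrete, here is a minimal sketch of a target-utilization scaling rule. It is a common textbook rule and not necessarily how the Compute Engine autoscaler decides; the function name, the percentage inputs, and the instance limits are all assumptions chosen for illustration.

```python
def recommended_instances(current: int, observed_util_pct: int, target_util_pct: int,
                          min_instances: int = 1, max_instances: int = 10) -> int:
    """Suggest an instance count that brings average utilization back to the target.

    Hypothetical helper: utilization values are whole percentages (e.g. 90 for 90%).
    """
    if current < 1 or target_util_pct < 1:
        raise ValueError("current and target_util_pct must be positive")
    # Integer ceiling of current * observed / target, avoiding float rounding.
    needed = -(-(current * observed_util_pct) // target_util_pct)
    # Never go below the floor or above the ceiling configured for the group.
    return max(min_instances, min(max_instances, needed))


# Traffic spike: 4 instances at 90% load with a 60% target suggests scaling out.
print(recommended_instances(4, 90, 60))
# Quiet period: 4 instances at 30% load with a 60% target suggests scaling in.
print(recommended_instances(4, 30, 60))
```

The clamp to `min_instances` and `max_instances` mirrors the limits you would normally set so that a spike cannot scale a group without bound.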

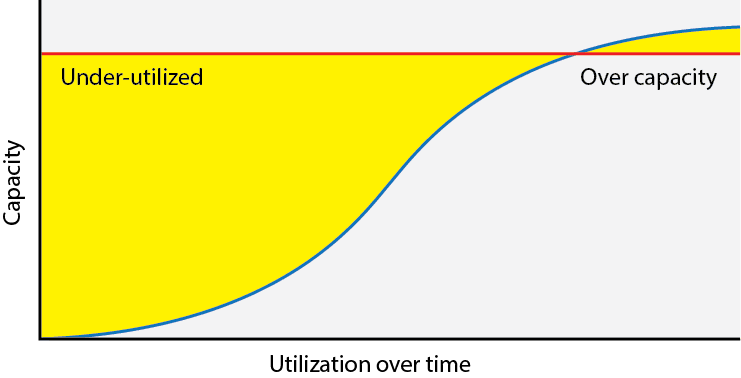

Capacity and bandwidth

In a traditional data center, you have to plan out your resource needs, acquire enough resources upfront to scale as needed, and manage your capacity and workload distributions carefully within those resource limits. Due to the nature of pre-provisioned resources, no matter how carefully you manage your capacity, you may end up with suboptimal utilization:

Figure 1: Utilization of pre-provisioned resources over time

In addition, this pre-provisioning of resources means that you have a hard ceiling on resources. If you need to scale beyond that, you're out of luck.

Cloud Platform helps resolve many of these utilization issues and scalability thresholds. You can scale up and scale down your VM instances as needed. Because you pay for what you use on a per-second basis, you can optimize your costs without having to pay for excess capacity you don't need all the time, or need only at peak traffic times.
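
The cost argument above can be illustrated with a small calculation. This is a sketch with made-up numbers: the hourly demand profile and the price per instance-hour are hypothetical, and billing is simplified to whole hours rather than seconds.

```python
def provisioned_cost(peak_instances: int, hours: int, hourly_rate: float) -> float:
    """Cost when capacity is bought upfront for the peak and runs all the time."""
    return peak_instances * hours * hourly_rate


def on_demand_cost(demand_per_hour: list[int], hourly_rate: float) -> float:
    """Cost when only the instances actually needed each hour are billed."""
    return sum(demand_per_hour) * hourly_rate


# Hypothetical day: 2 instances for 20 off-peak hours, 10 instances for a 4-hour peak.
demand = [2] * 20 + [10] * 4
rate = 0.10  # made-up price per instance-hour

fixed = provisioned_cost(max(demand), len(demand), rate)
elastic = on_demand_cost(demand, rate)
# Share of the pre-provisioned capacity that is actually used.
utilization = sum(demand) / (max(demand) * len(demand))

print(f"pre-provisioned: {fixed:.2f}, pay-per-use: {elastic:.2f}, "
      f"utilization: {utilization:.0%}")
```

In this toy profile the pre-provisioned setup is sized for the peak, so roughly two thirds of the paid capacity sits idle over the day.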

Security

The Google security model is an end-to-end process, built on over 18 years of experience focused on keeping customers safe on Google applications like Gmail and Google Apps. In addition, Google’s site reliability engineering teams oversee operations of the platform systems to help ensure high availability and prevent abuse of platform resources.

Network infrastructure

In a traditional data center, you manage a complex network setup, including racks of servers, storage, multiple layers of switches, load balancers, routers, and firewall devices. You must set up, maintain, and monitor software and detailed device configurations. In addition, you have to worry about the security and availability of your network, and you have to add and upgrade equipment as your networking needs grow.

In contrast, Cloud Platform uses a software-defined networking (SDN) model, allowing you to configure your networking entirely through Cloud Platform's service APIs and user interfaces. You don't have to pay for or manage data-center networking hardware. For more details about Google's SDN stack, Andromeda, see the blog post "Enter the Andromeda zone".

Facilities and support

When you use Cloud Platform, you no longer need to worry about installing or maintaining physical data-center hardware, nor do you need to worry about having the skilled technicians to do so. Google takes care of both the hardware layer and the technicians, allowing you to focus on running your application.

Compliance

Google undergoes regular independent third-party audits to verify that Cloud Platform is in alignment with security, privacy, and compliance controls. Cloud Platform has regular audits for standards such as ISO 27001, ISO 27017, ISO 27018, SOC 2, SOC 3, and PCI DSS.

Focusing on Security benefits

Data breaches, theft, and destruction cost the world economy billions of dollars every year. For this reason, the major cloud providers, GCP included, have been striving to comply with reputable standards such as:

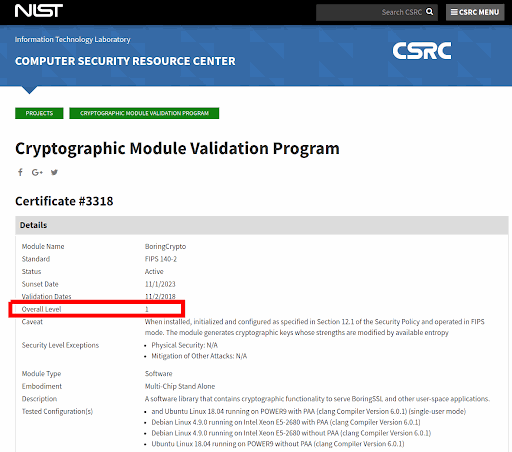

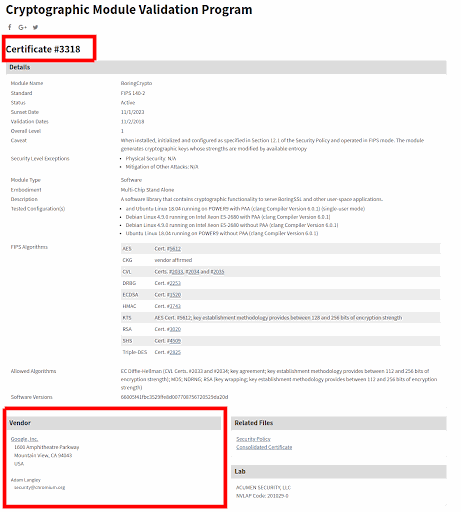

FIPS 140-2

ISO/IEC 27001

PCI DSS

ISO 27017

ISO 27018, SOC 2, SOC 3

Encryption of all data at rest and in transit

Google Cloud has invested millions of dollars in recent years to comply with FIPS 140-2 Level 1.

As a reminder, the Federal Information Processing Standards (FIPS) are among the most demanding security standards in the world. They were initially established by the US federal government to keep its secrets and infrastructure safe.

The following measures are implemented by GCP and are most likely NOT implemented at the local network level of an on-premises installation:

| Technology | Google Cloud Compute | Standard DIY corporate implementation |
| --- | --- | --- |
| Local SSD storage product is automatically encrypted with NIST-approved ciphers. | Applied on all storage | RARELY implemented |
| Automatic encryption of traffic between internal and external data locations using NIST-approved encryption algorithms. | Applied on all data transfers | RARELY implemented |
| When the clients connect to the infrastructure, their TLS clients must be configured to require the use of secure FIPS-compliant algorithms; if the TLS client and infrastructure owner TLS services agree on an encryption method that is incompatible with FIPS, a non-validated encryption implementation will be used. | Applied on all connections | VERY RARELY implemented |
| Applications you build and operate on the production infrastructure might include their own cryptographic implementations; in order for the data they process to be secured with a FIPS-validated cryptographic module, you must integrate such an implementation yourself. | Ready to Use | VERY RARELY implemented |

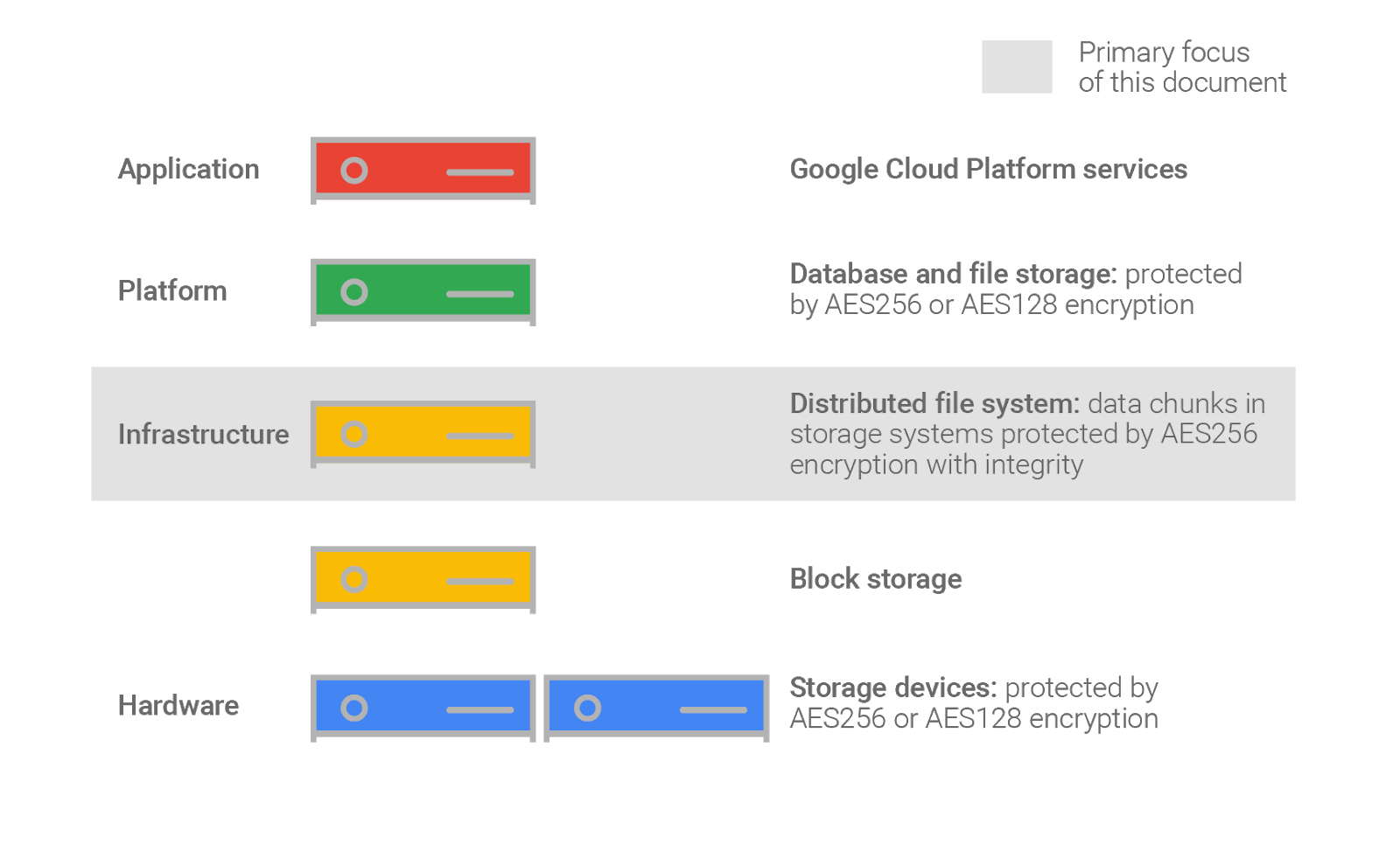

Google Cloud environment and encryption at rest

CIO level

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| Google uses several layers of encryption to protect customer data at rest in Google Cloud Platform products. | Applied as a Standard | RARELY implemented |
| Google Cloud Platform encrypts customer content stored at rest, without any action required from the customer, using one or more encryption mechanisms. There are some minor exceptions, which Google documents separately. | Applied as a Standard | RARELY implemented |

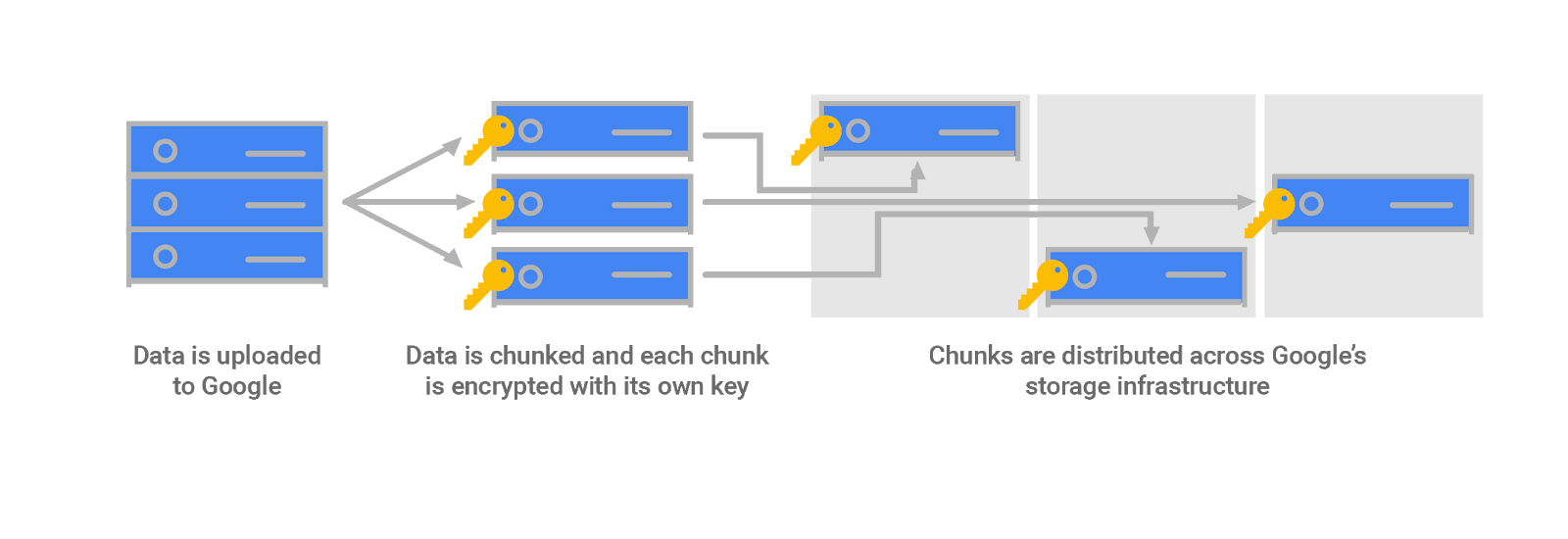

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| Data for storage is split into chunks, and each chunk is encrypted with a unique data encryption key. These data encryption keys are stored with the data, encrypted with ("wrapped" by) key encryption keys that are exclusively stored and used inside Google's central Key Management Service. Google's Key Management Service is redundant and globally distributed. | Achieved as a Standard | VERY RARELY implemented |
| Data stored in Google Cloud Platform is encrypted at the storage level using either AES256 or AES128. | Achieved as a Standard | VERY RARELY implemented |
| Google uses a common cryptographic library, Tink, to implement encryption consistently across almost all Google Cloud Platform products. Because this common library is widely accessible, only a small team of cryptographers needs to properly implement and maintain this tightly controlled and reviewed code. | Achieved as a Standard | VERY RARELY implemented |

Layers of encryption

Google uses several layers of encryption to protect data. Using multiple layers of encryption adds redundant data protection and allows Google to select the optimal approach based on application requirements.

Each chunk is distributed across Google's storage systems and is replicated in encrypted form for backup and disaster recovery. A malicious individual who wanted to access customer data would need to know and be able to access (1) all storage chunks corresponding to the data they want, and (2) the encryption keys corresponding to the chunks.

Google uses the Advanced Encryption Standard (AES) algorithm to encrypt data at rest. AES is widely used because (1) both AES256 and AES128 are recommended by the National Institute of Standards and Technology (NIST) for long-term storage use (as of March 2019), and (2) AES is often included as part of customer compliance requirements.

Data stored across Google Cloud Storage is encrypted at the storage level using AES, in Galois/Counter Mode (GCM) in almost all cases. This is implemented in the BoringSSL library that Google maintains. This library was forked from OpenSSL for internal use, after many flaws were exposed in OpenSSL. In select cases, AES is used in Cipher Block Chaining (CBC) mode with a hashed message authentication code (HMAC) for authentication; and for some replicated files, AES is used in Counter (CTR) mode with HMAC. In other Google Cloud Platform products, AES is used in a variety of modes.

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| Native multi-layered encryption | Applied as a Standard | VERY RARELY achieved |
| Native AES encryption with CTR mode | Applied as a Standard | VERY RARELY achieved |
| Native GCM | Applied as a Standard | VERY RARELY achieved |

Encryption at the storage device layer

In addition to the storage-system-level encryption described above, in most cases data is also encrypted at the storage device level, with at least AES128 for hard disks (HDD) and AES256 for new solid-state drives (SSD), using a separate device-level key (which is different from the key used to encrypt the data at the storage level). As older devices are replaced, solely AES256 will be used for device-level encryption.

Backup encryption

Google's backup system ensures that data remains encrypted throughout the backup process. This approach avoids unnecessarily exposing plaintext data. In addition, the backup system further encrypts each backup file independently with its own data encryption key (DEK), derived from a key stored in Google's Key Management Service (KMS) plus a randomly generated per-file seed at backup time. Another DEK is used for all metadata in backups, which is also stored in Google's KMS.
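
The per-file key derivation described above can be sketched as follows. The source does not say which derivation function Google uses, so HMAC-SHA256 is an assumption here, and the names `derive_backup_dek`, `master_key`, and the `b"backup-dek"` label are hypothetical.

```python
import hashlib
import hmac
import secrets


def derive_backup_dek(master_key: bytes, seed: bytes) -> bytes:
    """Derive a 256-bit per-file key from a master key and a per-file seed.

    Assumption: HMAC-SHA256 stands in for whatever derivation Google actually uses.
    """
    return hmac.new(master_key, b"backup-dek" + seed, hashlib.sha256).digest()


# Stand-in for the key that would live inside a key management service.
master_key = secrets.token_bytes(32)

# Each backup file gets its own random seed, generated at backup time.
seed_a = secrets.token_bytes(16)
seed_b = secrets.token_bytes(16)

dek_a = derive_backup_dek(master_key, seed_a)
dek_b = derive_backup_dek(master_key, seed_b)

# Different seeds give different keys; the same seed reproduces the same key.
print(dek_a != dek_b, dek_a == derive_backup_dek(master_key, seed_a))
```

Because the seed can be stored next to the backup file, only the master key has to be protected centrally; without it the seed alone reveals nothing about the derived key.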

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| On-the-fly backup flow encryption | Achieved as a Standard | VERY RARELY implemented |
| Storage backup encryption | Achieved as a Standard | VERY RARELY implemented |

Key Management by GCP

The key used to encrypt the data in a chunk is called a data encryption key (DEK). Because of the high volume of keys at Google, and the need for low latency and high availability, these keys are stored near the data that they encrypt. The DEKs are encrypted with (or "wrapped" by) a key encryption key (KEK). One or more KEKs exist for each Google Cloud Platform service. These KEKs are stored centrally in Google's Key Management Service (KMS), a repository built specifically for storing keys. Having a smaller number of KEKs than DEKs and using a central key management service makes storing and encrypting data at Google scale manageable, and allows Google to track and control data access from a central point.

For each Google Cloud Platform customer, any non-shared resources are split into data chunks and encrypted with keys separate from keys used for other customers. These DEKs are even separate from those that protect other pieces of the same data owned by that same customer.

DEKs are generated by the storage system using Google's common cryptographic library. They are then sent to KMS to wrap with that storage system's KEK, and the wrapped DEKs are passed back to the storage system to be kept with the data chunks. When a storage system needs to retrieve encrypted data, it retrieves the wrapped DEK and passes it to KMS. KMS then verifies that this service is authorized to use the KEK, and if so, unwraps and returns the plaintext DEK to the service. The service then uses the DEK to decrypt the data chunk into plaintext and verify its integrity.
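
The wrap and unwrap flow above can be sketched in a few lines. This is a toy model, not Google's implementation: `ToyKMS`, `store`, and `load` are hypothetical names, and the cipher is a SHA-256 keystream with an HMAC tag that only stands in for AES so the example runs with the standard library. It must not be used to protect real data.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream from SHA-256 over a counter (stand-in for AES, NOT secure)."""
    out, counter = b"", 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt and authenticate; returns nonce + ciphertext + HMAC tag."""
    nonce = secrets.token_bytes(16)
    body = bytes(p ^ s for p, s in zip(plaintext, _keystream(key, nonce, len(plaintext))))
    return nonce + body + hmac.new(key, nonce + body, hashlib.sha256).digest()


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    """Verify the tag first, then decrypt; raises ValueError on tampering."""
    nonce, body, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity check failed")
    return bytes(c ^ s for c, s in zip(body, _keystream(key, nonce, len(body))))


class ToyKMS:
    """Holds one KEK per service; KEKs never leave this object."""

    def __init__(self) -> None:
        self._keks: dict[str, bytes] = {}
        self.audit_log: list[tuple[str, str]] = []

    def create_kek(self, service: str) -> None:
        self._keks[service] = secrets.token_bytes(32)

    def wrap(self, service: str, dek: bytes) -> bytes:
        self.audit_log.append(("wrap", service))
        return toy_encrypt(self._keks[service], dek)

    def unwrap(self, service: str, wrapped_dek: bytes) -> bytes:
        if service not in self._keks:  # authorization check, heavily simplified
            raise PermissionError(f"{service} may not use this KEK")
        self.audit_log.append(("unwrap", service))
        return toy_decrypt(self._keks[service], wrapped_dek)


def store(kms: ToyKMS, service: str, data: bytes, chunk_size: int = 4):
    """Split data into chunks; each chunk gets its own DEK, stored wrapped beside it."""
    records = []
    for start in range(0, len(data), chunk_size):
        dek = secrets.token_bytes(32)
        chunk = toy_encrypt(dek, data[start:start + chunk_size])
        records.append((chunk, kms.wrap(service, dek)))
    return records


def load(kms: ToyKMS, service: str, records) -> bytes:
    """Ask the KMS to unwrap each DEK, then decrypt and verify each chunk."""
    return b"".join(toy_decrypt(kms.unwrap(service, wrapped), chunk)
                    for chunk, wrapped in records)


kms = ToyKMS()
kms.create_kek("storage")
stored = store(kms, "storage", b"customer data")
print(load(kms, "storage", stored) == b"customer data")
```

The point of the structure is that the storage layer only ever holds encrypted chunks and wrapped DEKs, while every use of a KEK passes through one place that can check authorization and record an audit entry.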

Most KEKs for encrypting data chunks are generated within KMS, and the rest are generated inside the storage services. For consistency, all KEKs are generated using Google's common cryptographic library, using a random number generator (RNG) built by Google. This RNG is based on NIST 800-90Ar1 CTR-DRBG and generates an AES256 KEK. This RNG is seeded from the Linux kernel's RNG, which in turn is seeded from multiple independent entropy sources. This includes entropic events from the data center environment, such as fine-grained measurements of disk seeks and inter-packet arrival times, and Intel's RDRAND instruction where it is available (on Ivy Bridge and newer CPUs).

Data stored in Google Cloud Platform is encrypted with DEKs using AES256 or AES128, as described above; and any new data encrypted in persistent disks in Google Compute Engine is encrypted using AES256. DEKs are wrapped with KEKs using AES256 or AES128, depending on the Google Cloud Platform service. Google has stated that it is working on upgrading all KEKs for Cloud services to AES256.

Google's KMS manages KEKs, and was built solely for this purpose. It was designed with security in mind. KEKs are not exportable from Google's KMS by design; all encryption and decryption with these keys must be done within KMS. This helps prevent leaks and misuse, and enables KMS to emit an audit trail when keys are used.

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| KMS integration | Applied as a Standard | VERY RARELY implemented |
| AES256 KEK | Applied as a Standard | VERY RARELY achieved |

Network & communication

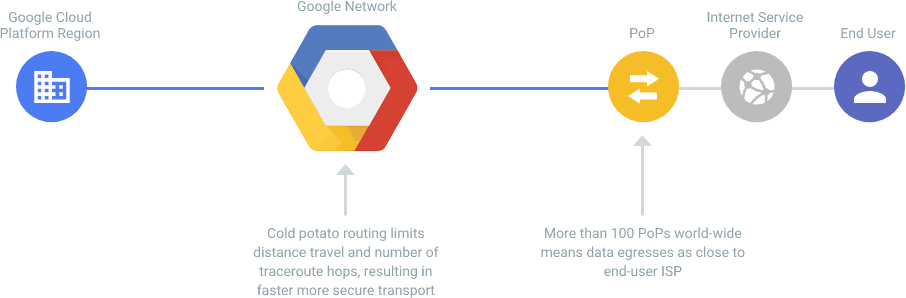

Premium Tier benefits

Full network ownership from one location to another is something most companies cannot afford. Yet, Google Cloud Compute is capable of achieving full end-to-end ownership and control.

| Technology | Google Cloud Compute | Standard DIY corporate implementation |
| --- | --- | --- |
| Full end-to-end network ownership | Achieved as a Standard | VERY RARELY implemented |
| Over 100 PoPs worldwide to offer extensive coverage and network redundancy/resilience | Achieved as a Standard | VERY RARELY implemented |

Operational stability / Serenity / SLA

High standards at the SLA level

Google Cloud Compute is among the few cloud providers able to offer an SLA above 99% on all its services.

| Covered Service | Monthly Uptime Percentage |
| --- | --- |
| Instances in Multiple Zones | >= 99.99% |
| A Single Instance | >= 99.5% |
| Load balancing | >= 99.99% |
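
To see what these percentages mean in practice, the following sketch converts an uptime percentage into days of uptime per year and minutes of permitted downtime per month. It assumes a 365-day year and a 30-day month; the function names are illustrative only.

```python
def uptime_days(sla_percent: float, days: float = 365.0) -> float:
    """Days of guaranteed uptime over a period of the given length."""
    return days * sla_percent / 100


def downtime_minutes(sla_percent: float, days: float = 30.0) -> float:
    """Minutes of downtime the SLA tolerates over a period of the given length."""
    return days * 24 * 60 * (100 - sla_percent) / 100


for sla in (99.5, 99.99):
    print(f"{sla}%: {uptime_days(sla):.1f} days up per year, "
          f"{downtime_minutes(sla):.1f} minutes down per 30-day month")
```

A 99.5% commitment leaves room for about 1.8 days of downtime per year, while 99.99% leaves under an hour, which is why the multi-zone figure is the one that matters for business-critical systems.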

If Google does not meet the Service Level Objective (SLO), and if the Customer meets its obligations under this SLA, the Customer will be eligible to receive the Financial Credits described below. This SLA states the Customer’s sole and exclusive remedy for any failure by Google to meet the SLO. Capitalized terms used in this SLA, but not defined in this SLA, have the meaning set forth in the Agreement. If the Agreement authorizes the resale or supply of Google Cloud Platform under a Google Cloud partner or reseller program, then all references to Customer in this SLA mean Partner or Reseller (as applicable), and any Financial Credit(s) will only apply for impacted Partner or Reseller order(s) under the Agreement.

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| Single instance/server implementation with a 99.5% SLA (about 363.2 days of uptime per year) | Achieved as a Standard | RARELY achieved |
| Multiple-instance (redundant, load-balanced) server implementation with a 99.99% SLA (about 364.96 days of uptime per year) | Achieved as a Standard | VERY RARELY achieved |
| Lifetime SLA | Achieved as a Standard | VERY RARELY achieved |

High Availability standards

Achieving the goals of 100% availability implies that your infrastructure is capable of reaching the following standards:

| Technology | Google Cloud Compute | Standard local implementation |
| --- | --- | --- |
| Self-managed hardware capacity | Achieved as a Standard | RARELY achieved |
| Auto-scalability | Achieved as a Standard | VERY RARELY achieved |
| Automated resource provisioning | Achieved as a Standard | VERY RARELY achieved |
| Multiple redundancy (storage) | Achieved as a Standard | VERY RARELY achieved |
| Multiple redundancy (computing instances/servers) | Achieved as a Standard | VERY RARELY achieved |
| Multiple redundancy (power sources) | Achieved as a Standard | VERY RARELY achieved |
| Load-balancing technology | Achieved as a Standard | VERY RARELY achieved |
| Real-time data replication on passive storage (data/files) | Achieved as a Standard | VERY RARELY achieved |
| Real-time data replication on dynamic storage (databases) | Achieved as a Standard | VERY RARELY achieved |
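
The value of redundancy can be estimated with a short calculation. This sketch assumes that replicas fail independently and that one healthy replica is enough to serve traffic, which is an idealization; the function name is illustrative.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability of a group that is up as long as at least one replica is up.

    Assumes failures are independent, which real deployments only approximate.
    """
    if not 0.0 <= single <= 1.0 or replicas < 1:
        raise ValueError("single must be in [0, 1] and replicas >= 1")
    return 1 - (1 - single) ** replicas


# One server at 99.5% versus two or three redundant servers at 99.5% each.
for n in (1, 2, 3):
    print(f"{n} replica(s): {combined_availability(0.995, n):.6%}")
```

Under these assumptions, adding a second replica cuts expected unavailability from 0.5% to 0.0025%, which is why redundancy appears on several rows of the table above.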

Port Cities - Helping you select the most suitable hosting solution for your business

Port Cities cooperates with the best cloud service providers in the world, including Google Cloud Platform, to make sure your ERP is accessible anytime, from anywhere. We also ensure our hosting solutions meet the most demanding criteria of your ERP system, in terms of security, capacity or operational stability. In any case, our server team is ready to discuss with you the most suitable server hosting solution for your business.
